Data Mesh for Enterprise Query Performance in a Multi-Tenant Environment

Mar 27, 2023

With the support of Intuitive.Cloud certified engineers, a global hosting enterprise successfully integrated a multi-tenant distributed data mesh and a near-real-time self-service consumption zone.

Challenges

The engagement called for designing and implementing a code migration strategy from legacy Cloudera to Databricks on GCP. The goal was to provide a true data and application delivery system that would exist in a multi-cloud environment under a single DevSecOps strategy.

The company faced several challenges during the technology integration process, including:

- Resistance to Change

- Integration with Existing Systems

- Training and Support

- Security and Compliance

- Return on Investment

Technology Solutions

Intuitive successfully integrated the following innovative technologies:

- Hybrid Cloud Infrastructure (AWS & GCP)

- Hybrid Ingestion Framework (Batch and Streaming Data Paths)

- Databricks (Transformation and Delta Table Staging)

- Snowflake (Multi-cloud Active/Active Consumption Layer)

- Source to Destination Data Orchestration pipelines
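As a rough illustration of how the batch and streaming paths listed above might share one source-to-destination flow, the sketch below routes a source to an ingestion stage based on its configuration and then sends it through the same downstream stages. This is a minimal sketch, not the project's implementation: `SourceConfig`, `plan_pipeline`, and every stage name are hypothetical labels, and pairing Kafka with the streaming path is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class SourceConfig:
    """Hypothetical source descriptor; field names are illustrative only."""
    name: str
    mode: str    # "batch" or "streaming"
    domain: str  # owning data-mesh domain or tenant


def plan_pipeline(source: SourceConfig) -> list[str]:
    """Return the ordered stage labels a source would pass through."""
    # Assumption: streaming sources arrive via Kafka, batch sources via a scheduler.
    if source.mode == "streaming":
        ingest = "kafka_stream_ingest"
    elif source.mode == "batch":
        ingest = "scheduled_batch_ingest"
    else:
        raise ValueError(f"unknown ingestion mode: {source.mode!r}")
    # Both paths converge on the same transformation, staging, and consumption stages.
    return [
        ingest,
        "databricks_transform",
        "delta_table_staging",
        "snowflake_consumption",
    ]
```

Keeping the routing decision in configuration means a new source can be onboarded by adding a descriptor rather than writing a new pipeline.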

Implementation Strategy

Our customer needed to move its current infrastructure and application environments to Amazon Web Services (AWS) and Google Cloud Platform (GCP). The multi-cloud platform was designed to provide a universally available and scalable environment for the customer's applications and for the database conversion from Hive Query Language (HQL) to Spark SQL, with room to expand for future consumption.

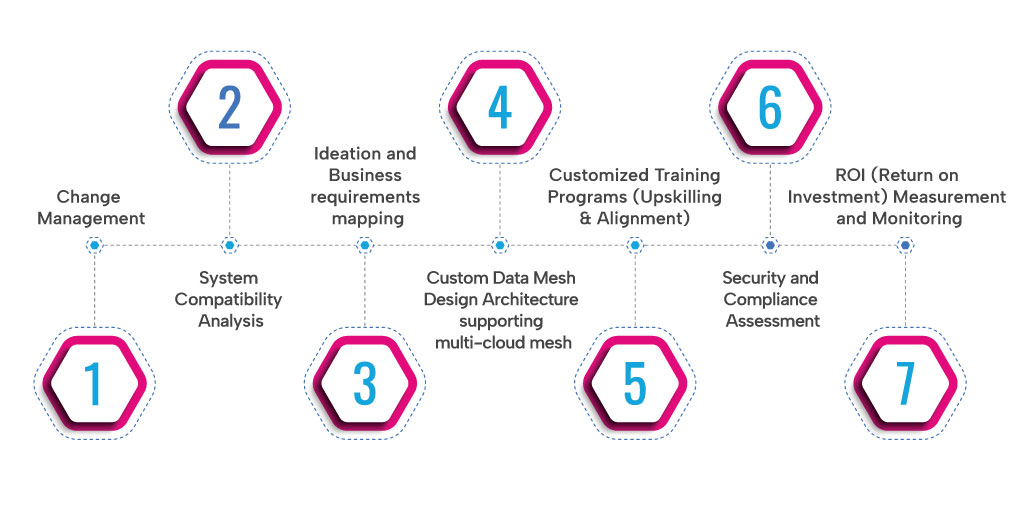

Intuitive employed a step-by-step approach to overcome the challenges faced during the integration process.

Results and Impact

Terraform was the platform automation choice, with its scripts used to spin up the combination of Kafka, Databricks, and Airflow. The customer informed Intuitive that its internal DevOps team had already completed this work in GCP. Intuitive's role was to ensure that the code runs via configuration, and to analyze and document best practices.

This section outlines the measurable results and the overall impact of the integrated technologies on the customer's operations, including:

- Increased Efficiency

- Enhanced Customer Experience

- Streamlined Decision-Making

- Improved Security and Compliance

- Positive ROI

"Intuitive provided the highest level of team engagement and technical expertise. Their alignment with our internal teams and partners was pivotal to the success of the project." - Chief Architect

Lessons Learned

A data mesh project is an innovative approach to managing data in an organization that emphasizes decentralization and domain-driven ownership. Here are five lessons that can be learned from a data mesh project:

- Clear communication and collaboration are critical: A data mesh project requires extensive collaboration across departments and teams. Clear communication between them is essential so that everyone works towards the same goals.

- Domain-driven design is essential: In a data mesh project, data ownership is assigned to different domains or departments, each responsible for their own data products. Therefore, it is crucial to apply domain-driven design principles to ensure that data products are aligned with the needs of the domain and that they are of high quality.

- Data governance is crucial: Data governance is critical in a data mesh project to ensure that data is managed consistently across domains and departments. This includes setting up policies, procedures, and standards to ensure data quality, security, and compliance.

- Technology is an enabler, not a solution: Technology is essential to support the data mesh project, but it is not the solution itself. The right tools and platforms must be chosen to support the decentralized approach to data management, but they must be implemented and used effectively to achieve the desired outcomes.

- Continuous learning and improvement are necessary: A data mesh is not a one-time project but an ongoing journey of continuous learning and improvement. The project team must continuously assess and adapt its approach, processes, and technologies to ensure that they meet the needs of the organization and deliver value over time.

Conclusion

This case study demonstrates that, with a well-planned implementation strategy and a commitment to continuous improvement, integrating innovative technologies can lead to significant benefits for a technology company. Our customer's experience serves as a valuable example for other organizations seeking to embrace modern technologies and drive business growth.