Why Are Containers More Popular Than Virtual Machines?

By Jay Sheth, Het Desai, Dhruvesh Sheladiya / Oct 27, 2022

Virtualization

Virtualization is quite an old concept. It began in the 1960s as a way to logically divide the system resources of mainframe computers among individual applications. Despite its age, it remains a relevant part of cloud computing.

Let us start with what virtualization is.

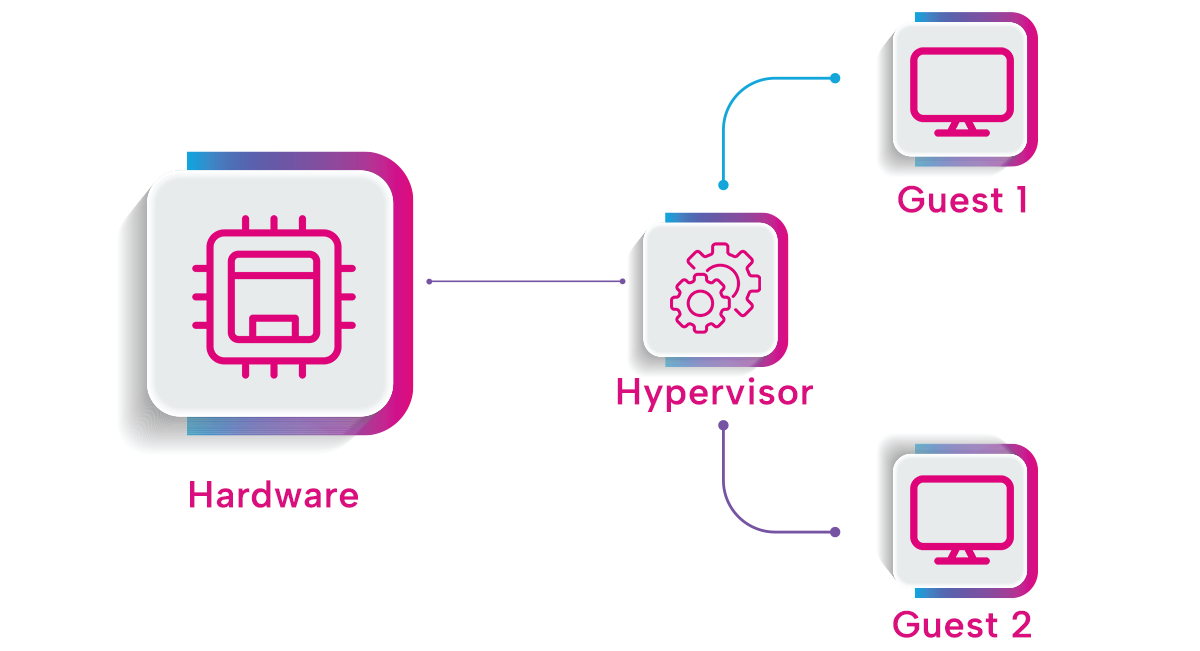

Virtualization uses software to create an abstraction layer over hardware, allowing hardware resources such as storage, compute, and memory to be distributed among multiple virtual machines (VMs). Each VM has its own operating system (OS) and behaves like an individual machine even though it shares the underlying hardware with other VMs.

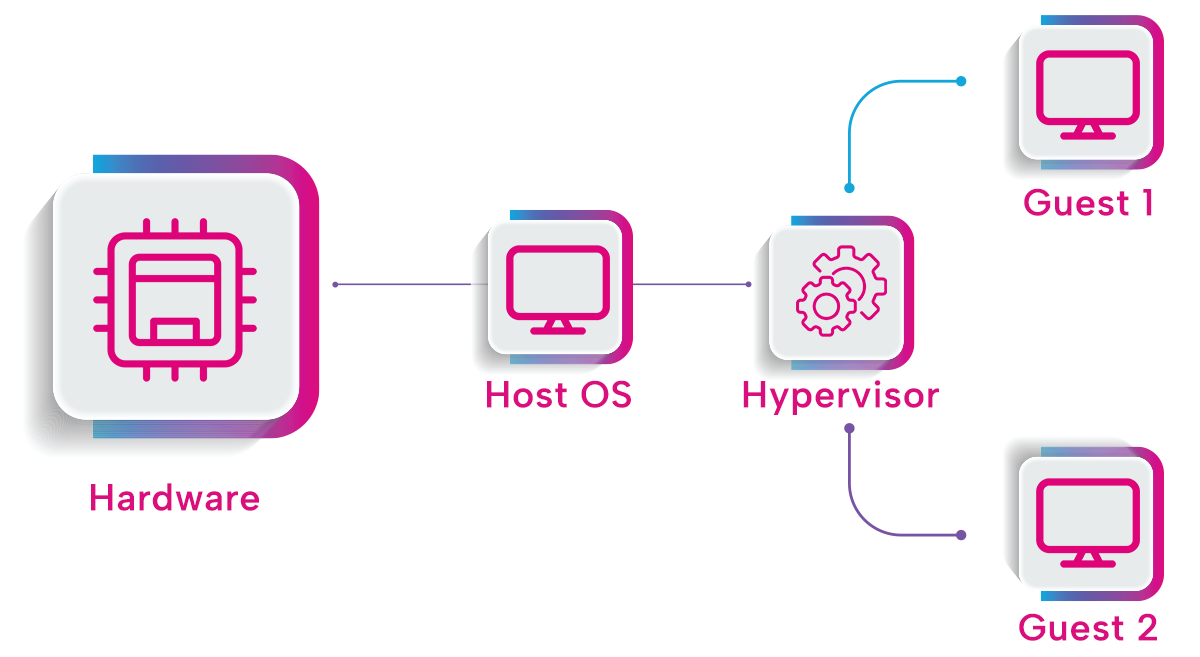

The abstraction layer we discussed earlier is a piece of software known as a hypervisor. It is a crucial component of the virtualization process, serving as the interface between the VMs and the underlying physical hardware. It runs on a host, that is, a physical server, and ensures that VMs do not interfere with each other. The main task of a hypervisor is to pool resources from the physical server and allocate them to different virtual environments.
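The pooling and allocation described above can be sketched as a toy model. This is only an illustration, not how any real hypervisor is implemented: the `Hypervisor` class, its methods, and the resource figures are all hypothetical, and real hypervisors also support features such as overcommitting resources, which this sketch ignores.

```python
from dataclasses import dataclass, field


@dataclass
class Hypervisor:
    """Toy model: pools a host's resources and hands slices of them to VMs."""
    total_cpus: int
    total_memory_gb: int
    vms: dict = field(default_factory=dict)  # VM name -> (cpus, memory_gb)

    def free_cpus(self) -> int:
        return self.total_cpus - sum(cpus for cpus, _ in self.vms.values())

    def free_memory_gb(self) -> int:
        return self.total_memory_gb - sum(mem for _, mem in self.vms.values())

    def create_vm(self, name: str, cpus: int, memory_gb: int) -> bool:
        """Allocate resources to a new VM; refuse if the pool cannot cover it."""
        if name in self.vms:
            return False
        if cpus > self.free_cpus() or memory_gb > self.free_memory_gb():
            return False
        self.vms[name] = (cpus, memory_gb)
        return True


# Assumed host size, chosen only for illustration.
host = Hypervisor(total_cpus=16, total_memory_gb=64)
print(host.create_vm("web", cpus=4, memory_gb=16))   # True
print(host.create_vm("db", cpus=8, memory_gb=32))    # True
print(host.create_vm("batch", cpus=8, memory_gb=8))  # False: only 4 CPUs left
print(host.free_cpus(), host.free_memory_gb())       # 4 16
```

Because every allocation is checked against the shared pool, one VM cannot take resources that already belong to another, which is the isolation role the hypervisor plays.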

There are two types of hypervisors:

Type 1 or “bare metal” hypervisors: A Type 1 hypervisor runs directly on the physical hardware of the underlying machine, interacting with its CPU, memory, and physical storage.

Type 2 or “hosted” hypervisors: A Type 2 hypervisor does not run directly on the underlying hardware. Instead, it runs as an application on top of a host OS.

Virtual Machine

Now that you know what virtualization and hypervisors are, we can move on to VMs.

A virtual machine, or VM, behaves much like a physical device such as your desktop or laptop. Both have a CPU, memory, and disk. The difference is that a physical device is a tangible piece of hardware, whereas a VM is defined entirely in software and runs on a hypervisor.

VMs create a virtual environment that simulates a physical computer. A VM consists of some configuration files, the storage for its virtual hard drive, and snapshots that preserve its state at particular points in time.
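To make the three parts concrete, here is a minimal sketch that models a VM as configuration, a virtual disk, and snapshots. The `VirtualMachine` class and its field names are hypothetical and do not correspond to any particular hypervisor's file formats.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class VirtualMachine:
    config: dict                                   # e.g. CPU count, memory size
    disk: dict = field(default_factory=dict)       # file path -> file contents
    snapshots: dict = field(default_factory=dict)  # label -> saved state

    def take_snapshot(self, label: str) -> None:
        """Preserve the current configuration and disk under a label."""
        self.snapshots[label] = copy.deepcopy((self.config, self.disk))

    def restore_snapshot(self, label: str) -> None:
        """Roll the VM back to the state saved under the label."""
        config, disk = copy.deepcopy(self.snapshots[label])
        self.config, self.disk = config, disk


vm = VirtualMachine(config={"cpus": 2, "memory_gb": 4})
vm.disk["/etc/app.conf"] = "version=1"
vm.take_snapshot("before-upgrade")

vm.disk["/etc/app.conf"] = "version=2"  # a change we later want to undo
vm.restore_snapshot("before-upgrade")
print(vm.disk["/etc/app.conf"])         # version=1
```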

What are Containers?

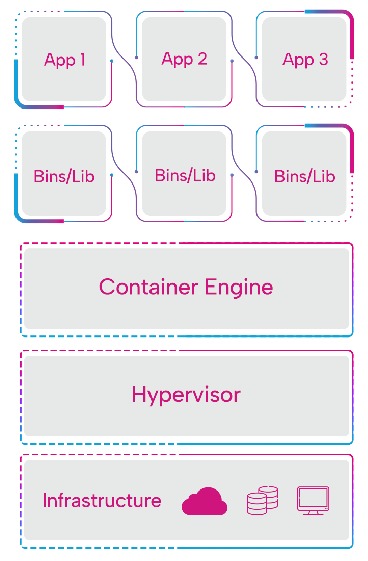

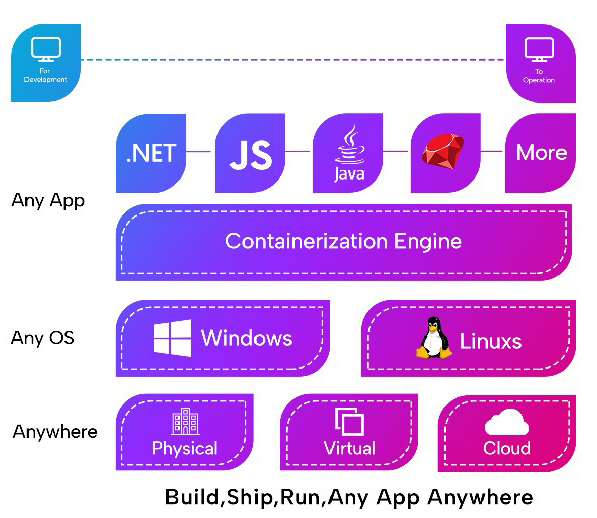

Containers are software units that package application code together with everything it needs to run, such as libraries, dependencies, binaries, and configuration files, in a standardized manner using operating system virtualization. Containers can run on various platforms, including desktops, traditional IT environments, and cloud infrastructures.

Containers are lightweight, portable, and swift because, unlike virtual machines, they do not include a full operating system image. As a result, they have less overhead and can leverage the features and resources of the host operating system, making them highly portable and easy to deploy.

A container host looks like a traditional IT environment: it has hardware and an operating system. However, it also has a container engine running on top of the host operating system. The container engine packages the libraries and dependencies within the container so that the container can move seamlessly from one machine to another.

Containers are gaining more attention, particularly in cloud environments. Several organizations are considering them as a substitute for VMs as the general-purpose computing platform for their applications and workloads.

Difference between containers and virtual machines (VMs)

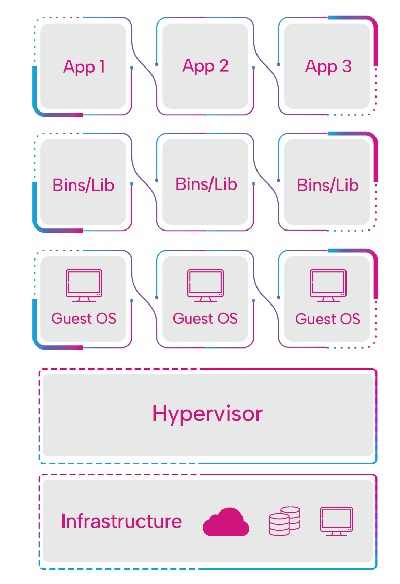

Containers and virtual machines are two distinct approaches to virtualizing computing resources. VMs virtualize all components down to the hardware level, generating multiple instances of operating systems on a single physical server. In contrast, containers virtualize solely the software layers above the operating system, forming lightweight packages that incorporate all the dependencies needed for a software application. Because containers share a single operating system instance, more workloads fit on the same hardware than with VMs, which makes containers faster, more flexible, and more portable.

Compared to VMs, containers are more agile and portable. VMs rely on a hypervisor, software that sits between the hardware and the virtual machines and enables virtualization by dividing the physical resources among them. Each virtual machine runs its own guest operating system. Containers, on the other hand, run on top of a physical server and its host operating system, which they share.

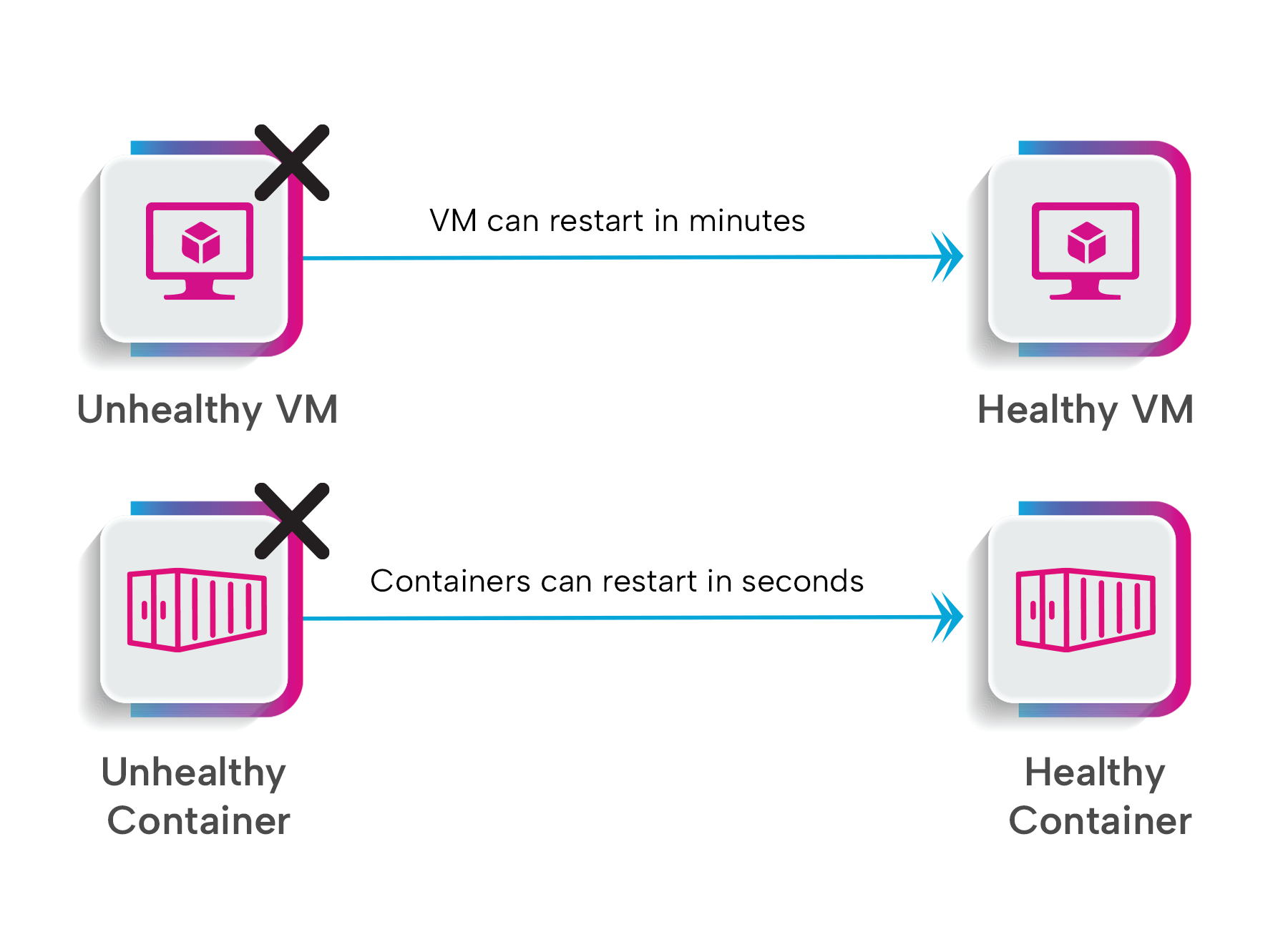

Both containers and VMs rely on an operating system that needs maintenance, such as bug fixes and patches. However, containers do not include an OS kernel. Instead, they use resources provided by the underlying host OS, which makes them notably smaller, more agile, and more portable than VMs. A container only needs to include the application code and its dependencies, whether the application is a single monolith or a set of microservices packaged in one or more containers to perform a business function. While VMs can take minutes to spin up, containers can often start in seconds or even milliseconds.
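A rough back-of-the-envelope calculation shows why sharing the host OS makes containers smaller. The figures below (10 workloads, 0.5 GB per application, 1 GB per operating system) are assumptions chosen purely for illustration, not measurements, and the sketch ignores the hypervisor's own overhead.

```python
def vm_memory_gb(workloads: int, app_gb: float, guest_os_gb: float) -> float:
    """Each VM carries its own guest OS in addition to the application."""
    return workloads * (app_gb + guest_os_gb)


def container_memory_gb(workloads: int, app_gb: float, host_os_gb: float) -> float:
    """Containers share one host OS, so the OS cost is paid only once."""
    return host_os_gb + workloads * app_gb


# Assumed numbers for illustration only.
workloads, app_gb, os_gb = 10, 0.5, 1.0

print(vm_memory_gb(workloads, app_gb, os_gb))         # 15.0
print(container_memory_gb(workloads, app_gb, os_gb))  # 6.0
```

Under these assumptions the OS cost grows with every VM added, while for containers it stays constant, so the gap widens as the number of workloads increases.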

Use Cases of Containers

1. Increased developer productivity: While testing an early version of an application, a developer can run it in a container on their own PC without installing it directly on the host OS or setting up a separate testing environment. Furthermore, containers eliminate problems with environment settings, handle scalability challenges, and simplify operations. Because containers solve so many of these challenges, developers can concentrate on development rather than on operations.

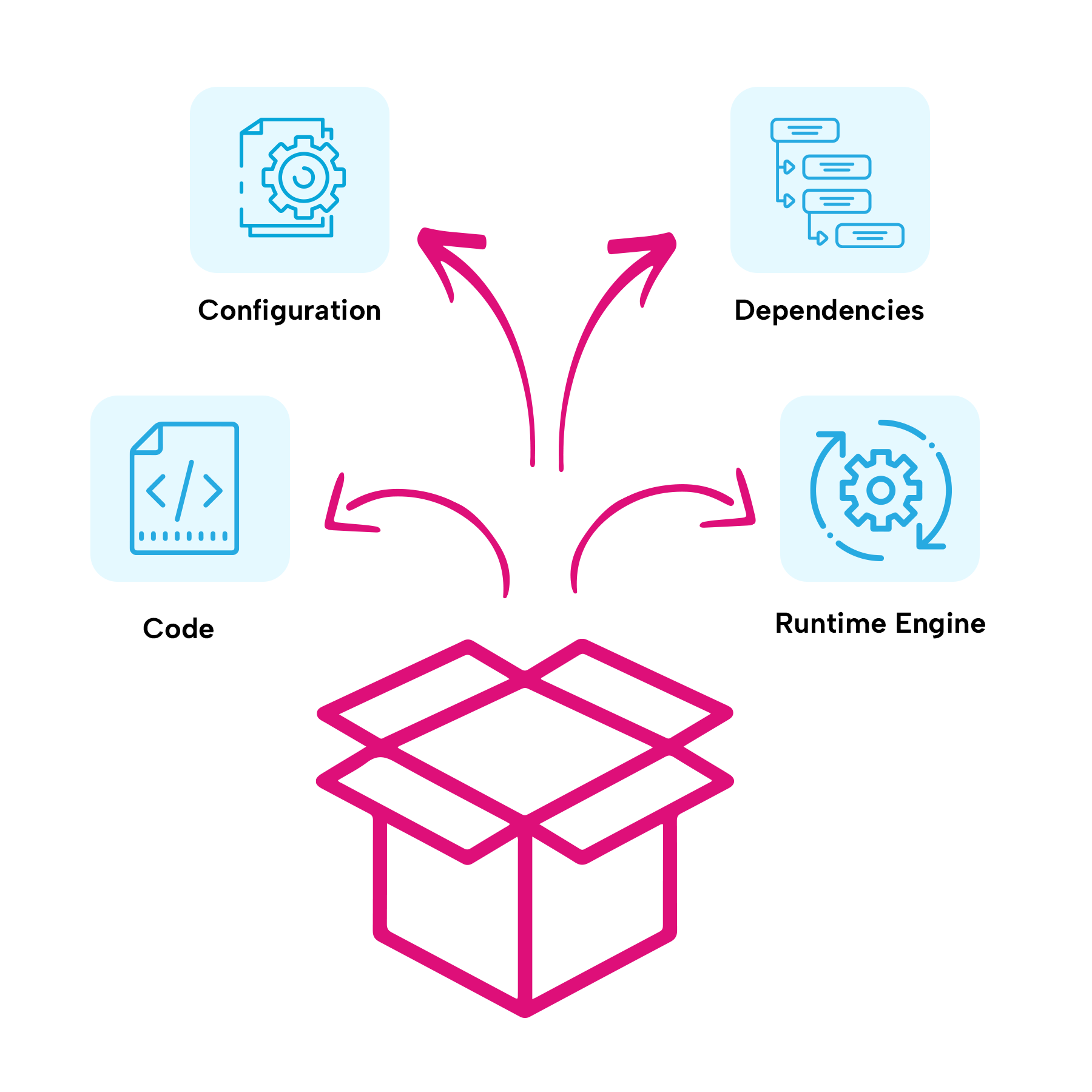

Using configuration files, the application code, its dependencies, and the runtime engine are packaged together into a robust unit known as a container, which can run independently in any environment.
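The idea that a packaged application behaves the same everywhere can be sketched as follows. This is a simplified model, not a real container format: `ContainerImage`, `environment_seen_by_app`, and the library names `libfoo` and `libbar` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContainerImage:
    """Hypothetical stand-in for a container image: everything the app needs."""
    app_code: str
    runtime: str
    dependencies: tuple  # (library name, version) pairs bundled in the image


def environment_seen_by_app(image: ContainerImage, host_libraries: dict) -> dict:
    """Return the runtime and libraries the application sees.

    host_libraries is accepted but deliberately ignored: the application
    uses what is bundled in the image, not what the host has installed.
    """
    env = {"runtime": image.runtime}
    env.update(dict(image.dependencies))
    return env


image = ContainerImage(
    app_code="app.py",
    runtime="python-3.11",
    dependencies=(("libfoo", "1.4"), ("libbar", "2.0")),
)

# Two hosts with different locally installed library versions.
laptop = environment_seen_by_app(image, host_libraries={"libfoo": "0.9"})
server = environment_seen_by_app(image, host_libraries={"libfoo": "3.1"})
print(laptop == server)  # True
```

Since the dependencies travel with the image, differences between the developer's machine and the server do not change what the application runs against.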

2. Supporting continuous operations with little to no downtime: In today's digital economy, downtime means much more than a brief power outage for mid-sized businesses. Customers will go elsewhere if they can't reach you because your system is down. Because container architecture provides a standardized mechanism to divide an application into independent containers, it is naturally well suited to continuous operations: individual containers can be updated or restarted without taking the whole application offline.
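One common way to exploit this independence is a rolling update, in which containers are replaced one at a time so that the rest keep serving traffic. The article does not name this technique, so the sketch below is just one possible illustration; `rolling_update` and the version labels are hypothetical.

```python
def rolling_update(replicas: list, new_version: str) -> tuple:
    """Replace one replica at a time and track how many stay in service.

    Returns the updated replica list and the lowest number of replicas
    that were serving traffic at any point during the update.
    """
    current = list(replicas)
    min_serving = len(current)
    for i in range(len(current)):
        # Replica i is out of service while it is swapped for the new version.
        serving = len(current) - 1
        min_serving = min(min_serving, serving)
        current[i] = new_version
    return current, min_serving


updated, min_serving = rolling_update(["v1", "v1", "v1"], "v2")
print(updated)      # ['v2', 'v2', 'v2']
print(min_serving)  # 2
```

With three replicas, at least two remain available throughout the update, so users never see the application fully down.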

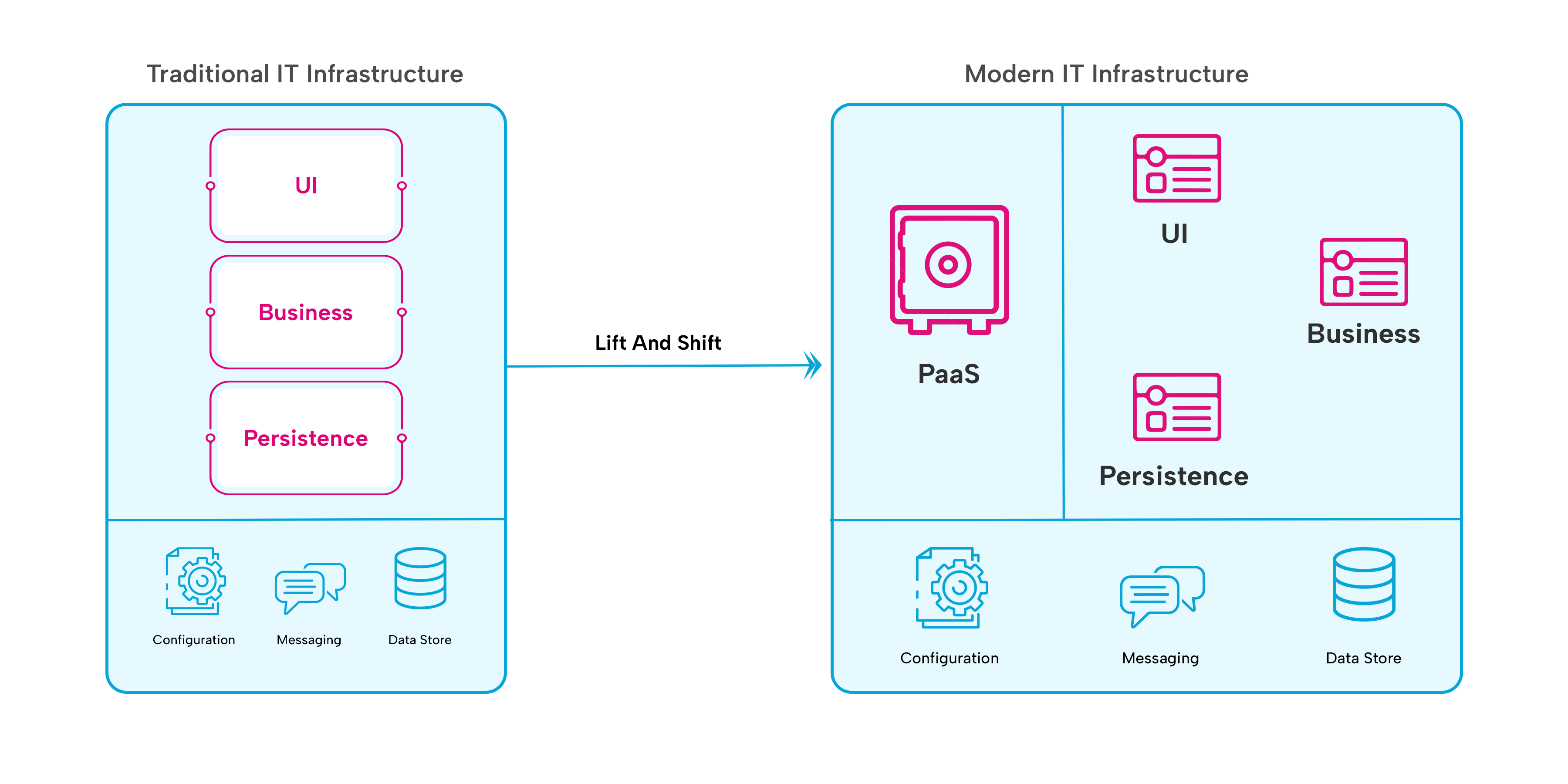

3. “Lift & shift” existing applications into modern cloud architectures: The lift & shift approach involves creating an individual container for each tier of the application, containing that tier's code along with its configuration details and the necessary runtime libraries and dependencies. Once the containers are configured to work together, they can be deployed on public, private, or hybrid cloud environments. With lift & shift, organizations can leverage the benefits of new technologies by re-packaging the application instead of changing it.

In other words, the traditional architecture is simply lifted and shifted onto modern infrastructure using containers, without any changes to the existing code and configuration.

4. Containers can run on IoT devices: Containers are well suited to installing and updating applications on IoT devices. Because a container includes all the software an application needs to function, it is lightweight and easy to transport, which is particularly beneficial for devices with restricted resources.

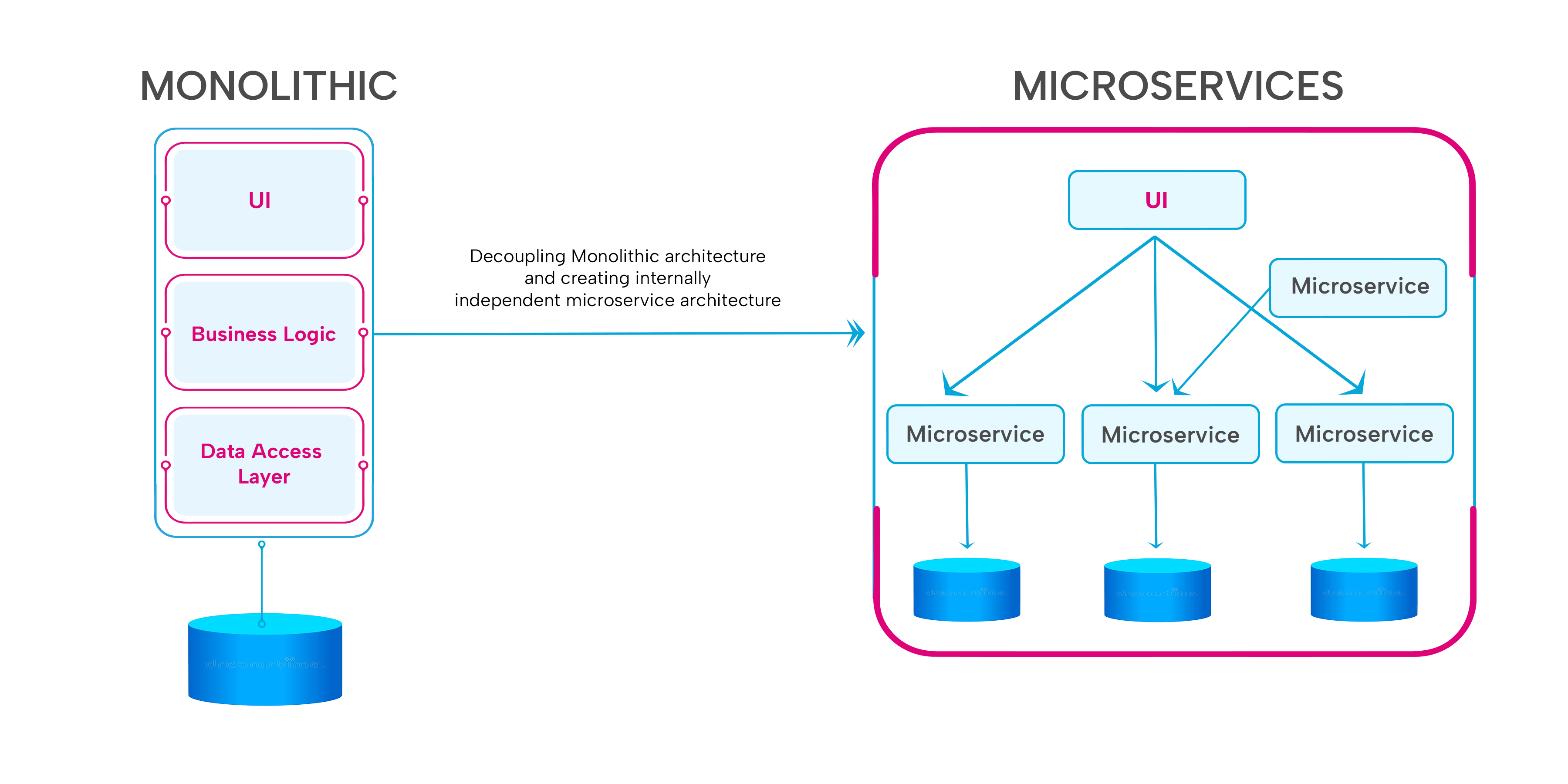

5. Great for microservice architecture: Containers support microservice architectures, allowing for more precise deployment and scaling of individual application components. This is preferable to scaling up an entire monolithic application simply because one component is struggling with the load.
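The difference between scaling one component and scaling a whole monolith can be worked through with a small calculation. The service names, request rates, and per-replica capacity below are assumed values for illustration, and the monolith is modeled simply as one replica carrying a copy of every component.

```python
import math


def replicas_needed(load_rps: int, capacity_rps: int) -> int:
    """Number of replicas required to serve the load (at least one)."""
    return max(1, math.ceil(load_rps / capacity_rps))


# Assumed load per component, in requests per second.
load = {"catalog": 200, "checkout": 900, "search": 150}
capacity = 250  # assumed requests per second one replica can handle

# Microservices: each component is scaled on its own.
per_service = {name: replicas_needed(rps, capacity) for name, rps in load.items()}
microservice_containers = sum(per_service.values())

# Monolith: every replica carries all components, so the busiest one
# dictates how many full copies of the application must run.
monolith_replicas = max(per_service.values())
monolith_component_instances = monolith_replicas * len(load)

print(per_service)                   # {'catalog': 1, 'checkout': 4, 'search': 1}
print(microservice_containers)       # 6
print(monolith_component_instances)  # 12
```

In this example only `checkout` is under pressure, so only it is replicated, while the monolith duplicates `catalog` and `search` along with it.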

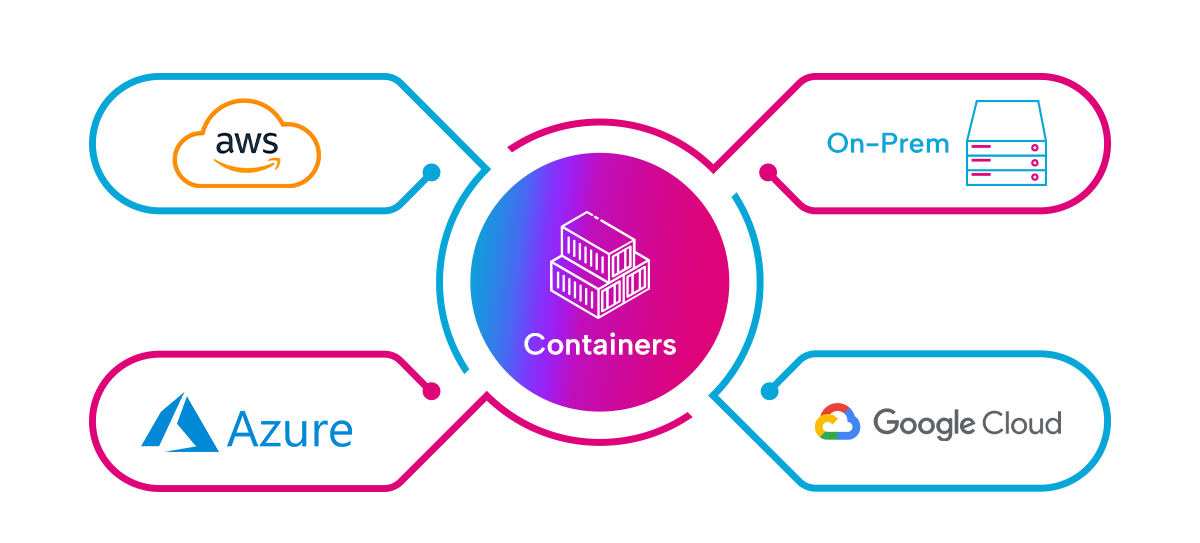

6. Hybrid and multi-cloud compatible: Containers provide flexibility in app deployment, allowing for the creation of a unified environment that can run on-premises and across multiple cloud platforms. This makes it possible to optimize costs and enhance operational efficiency by leveraging existing infrastructure and using the benefits of different cloud providers for each workload.

Disadvantages of Containers

- Malicious and rogue processes can run in containers. Because containers have a short lifespan, it is harder to monitor them and to identify a malicious process.

- Containers share the kernel of the host OS, so if the kernel becomes vulnerable, all the containers become vulnerable. If an attacker succeeds in accessing the kernel from one container, every container attached to that kernel is affected. For this reason, VMs isolate applications better than containers.
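The isolation difference can be illustrated by counting which workloads depend on a compromised kernel. This is a deliberately simplified model with hypothetical workload and kernel names; it assumes a kernel compromise affects exactly the workloads running on that kernel.

```python
def affected_workloads(workload_to_kernel: dict, compromised_kernel: str) -> list:
    """List the workloads that run on the compromised kernel."""
    return sorted(
        name for name, kernel in workload_to_kernel.items()
        if kernel == compromised_kernel
    )


# Containers on one host all share the host kernel.
containers = {"web": "host-kernel", "api": "host-kernel", "db": "host-kernel"}

# Each VM runs its own guest OS with its own kernel.
vms = {"web": "guest-kernel-web", "api": "guest-kernel-api", "db": "guest-kernel-db"}

print(affected_workloads(containers, "host-kernel"))  # ['api', 'db', 'web']
print(affected_workloads(vms, "guest-kernel-web"))    # ['web']
```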